Staying Human

Staying human in the age of acceleration

A project exploring what really matters as the world becomes harder to see clearly.

We live in a moment where our tools feel weightless and intelligence appears on demand. But behind the surfaces of our lives sit powerful forces - cultural, biological, technological - that shape us.

The Staying Human Project is dedicated to seeing those forces clearly. Through books, essays and interactive tools, it explores how ancient wisdom and modern understanding can help us stay grounded as the world accelerates.

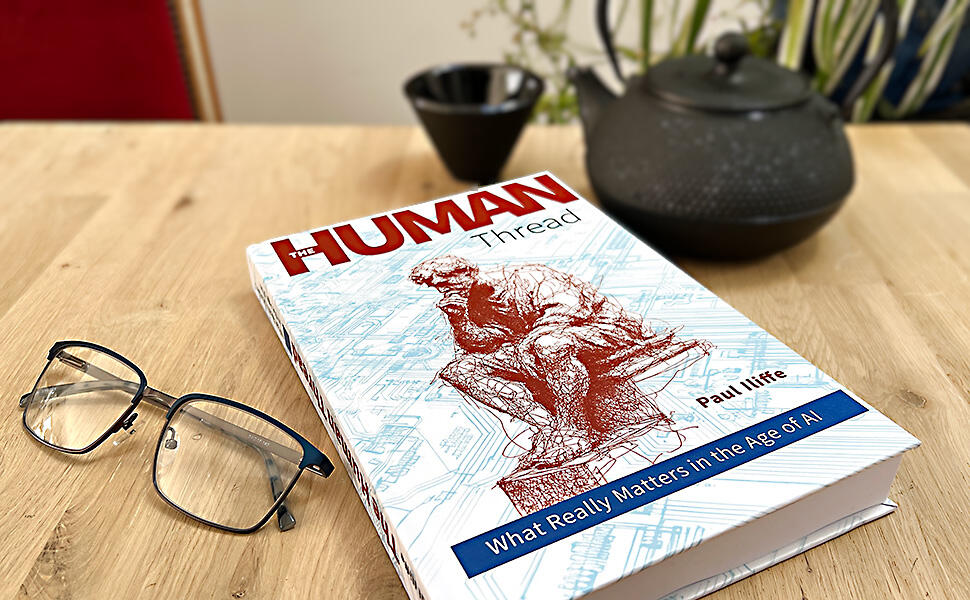

The Book

The Human Thread

40 frameworks for what really matters. 500+ sources across philosophy, psychology and culture - distilled into clear insights to navigate modern life.

The Essays

Latest writing

Regular writing on attention, identity, meaning and the invisible systems shaping modern life. Deep dives into the frameworks from the book.

What is the Staying Human Project?

The Staying Human Project explores what truly matters in an age of accelerating technology. Through books, essays and tools, it reveals the visible and invisible forces shaping human life - from ancient wisdom to biological intelligence to the hidden infrastructure of our digital world.

The project is not technology-first. It is human-first.

The central questions are:

How do humans make sense of accelerating systems?

What does it mean to live wisely when the world becomes harder to see clearly?

Which forms of wisdom endure regardless of technological cycles?

The Invisible Spectrum

At the heart of this project is a simple observation: much of what shapes our lives now happens beyond our perception.

The Invisible Spectrum is the range of forces, consequences and meanings that fall outside our immediate awareness - yet determine so much of what we become.

This range includes:

Unseen cultural forces we inherit without choosing

Hidden consequences of our technological choices

Biological systems we depend on but rarely notice

Digital and algorithmic layers that shape behavior invisibly

The cognitive blind spots that shape our perception

About the author

Paul Iliffe

Paul spent two decades as a strategist for international advertising agencies, finding clarity within complexity and distilling insights into memorable ideas.

When AI began reshaping everything, he asked: what human wisdom must we preserve? The Human Thread is his answer - a synthesis of enduring frameworks for navigating change.

Paul is also the founder of The Exotic Teapot, a specialty tea and teaware company. He lives in Bournemouth, UK.

Stay connected

Join the newsletter for insights on staying human in the age of AI, new essays and reflections on what really matters.

No spam. Unsubscribe anytime. Typically 1-2 emails per month.

The Human Thread

What Really Matters in the Age of AI

Timeless Wisdom. 40 frameworks for what really matters.

A clear roadmap for staying human as technology accelerates.

About the book

We are living through the biggest shift in human capability since the Industrial Revolution, yet what makes human judgement valuable has not changed. As artificial intelligence reshapes work, relationships and knowledge, we need to identify the core values worth retaining.

The Human Thread presents 40 enduring frameworks that have guided humanity across centuries and cultures, showing how to apply them to the choices we face today. This synthesis draws on 500+ sources across more than 175 disciplines, connecting ancient philosophy, culture, science and psychology with contemporary research.

The book moves from self-understanding through relationships and context to the threshold where human and artificial intelligence meet. It shows what is possible when human judgement stays at the centre and examines what threatens that possibility.

Who this book is for

Readers who love big-ideas nonfiction grounded in evidence, clarity and human meaning

People curious about what truly matters when technology accelerates

Anyone navigating questions of identity, meaning, work and relationships in changing times

Thinkers who appreciate clear frameworks drawn from philosophy, psychology, culture and science

Those seeking timeless wisdom rather than trends or self-help

Inside the book

Part 1: The Self

Identity, habits, attention, emotional intelligence, health, meaning, learning, recognition, resilience and creativity. Building the foundation from within.

Part 2: Relationships

Love, friendship, family, communication, trust, conflict, community, ethics, justice and cultural navigation. How we connect, cooperate and thrive together.

Part 3: World & Beyond

Nature, food, sustainability, technology, economics, story, art, conflict, mortality and legacy. Our relationship with the planet, systems and time itself.

Parts 4-6: The AI Age

Being human when intelligence expands beyond us. Partnership principles for Human and AI collaboration. What lies beyond partnership.

Features

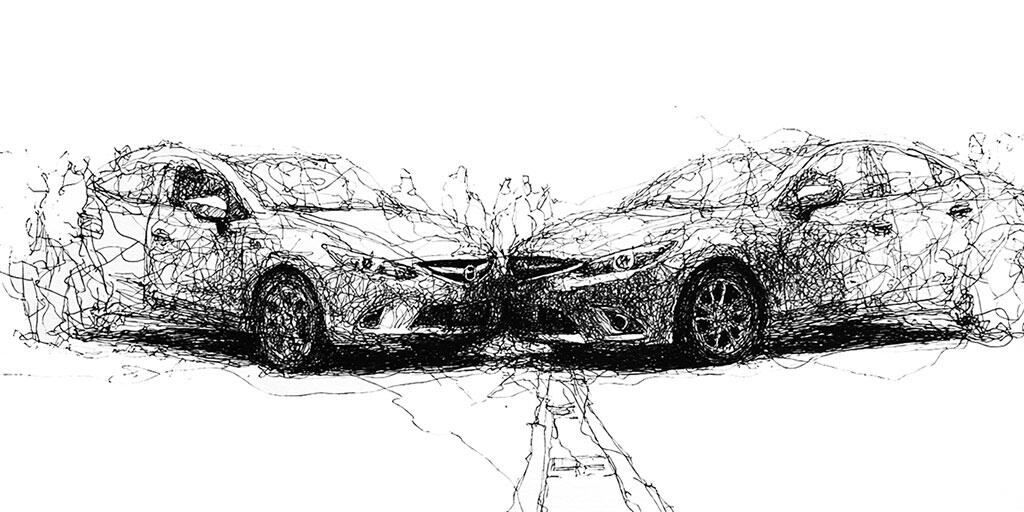

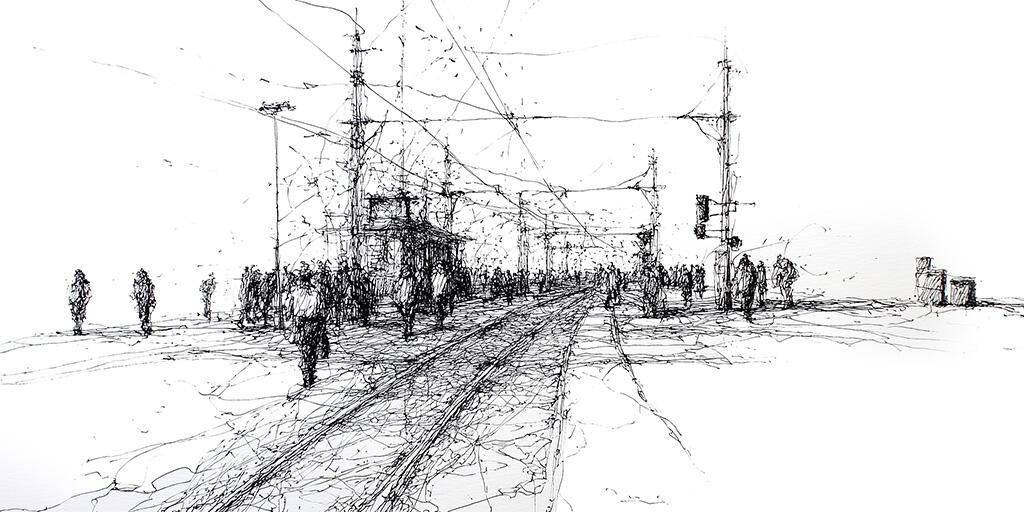

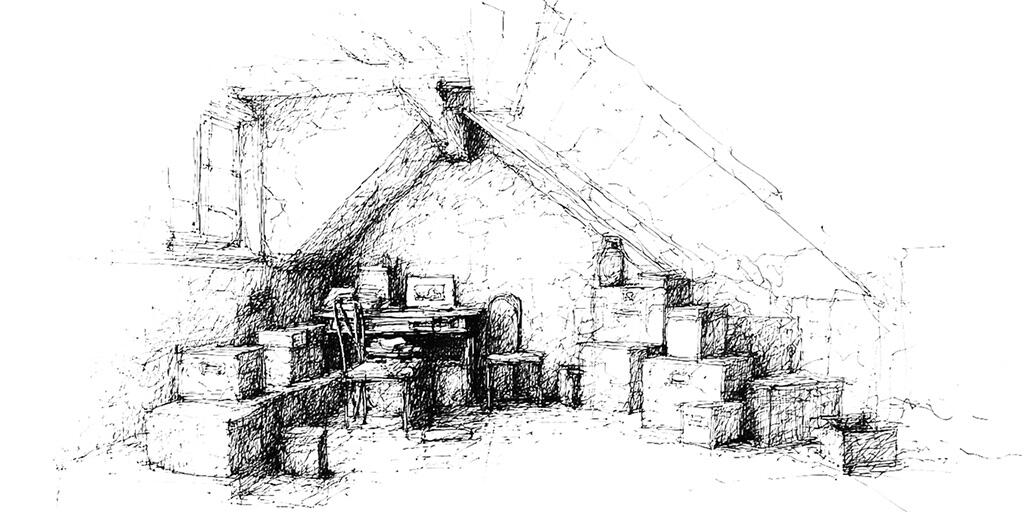

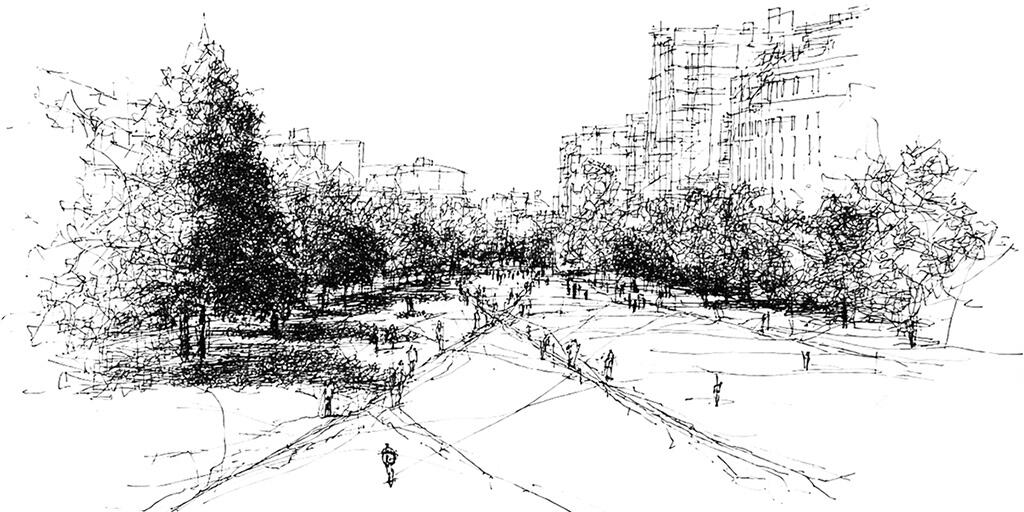

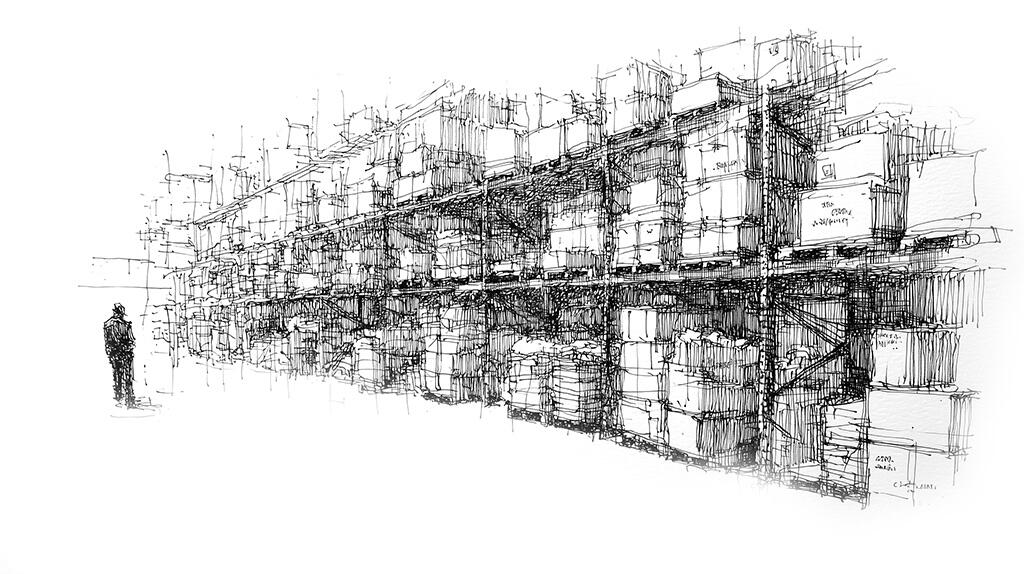

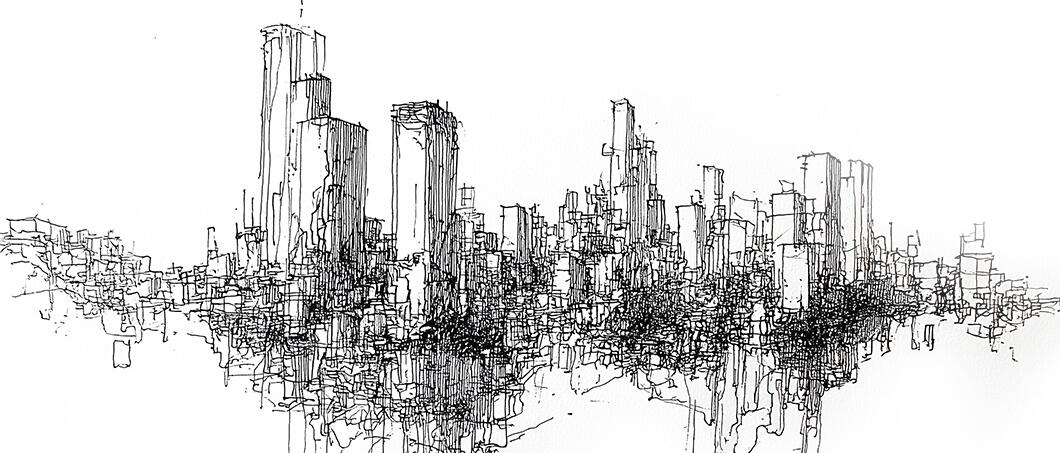

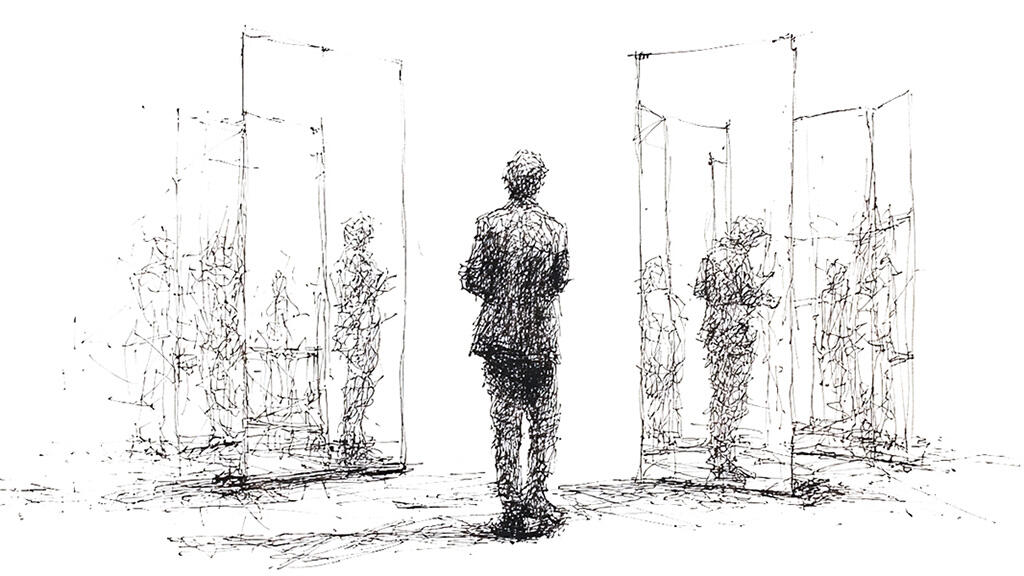

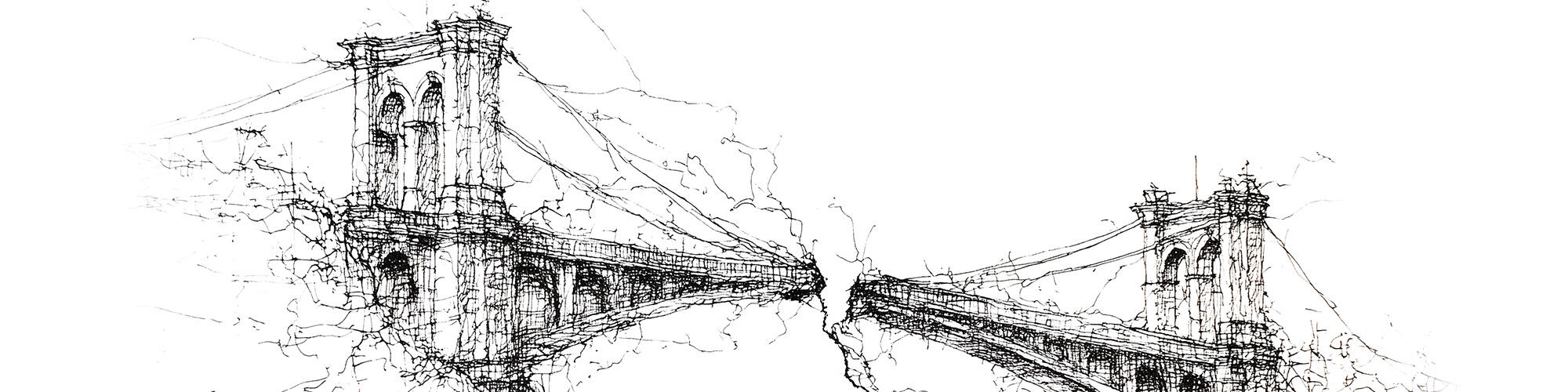

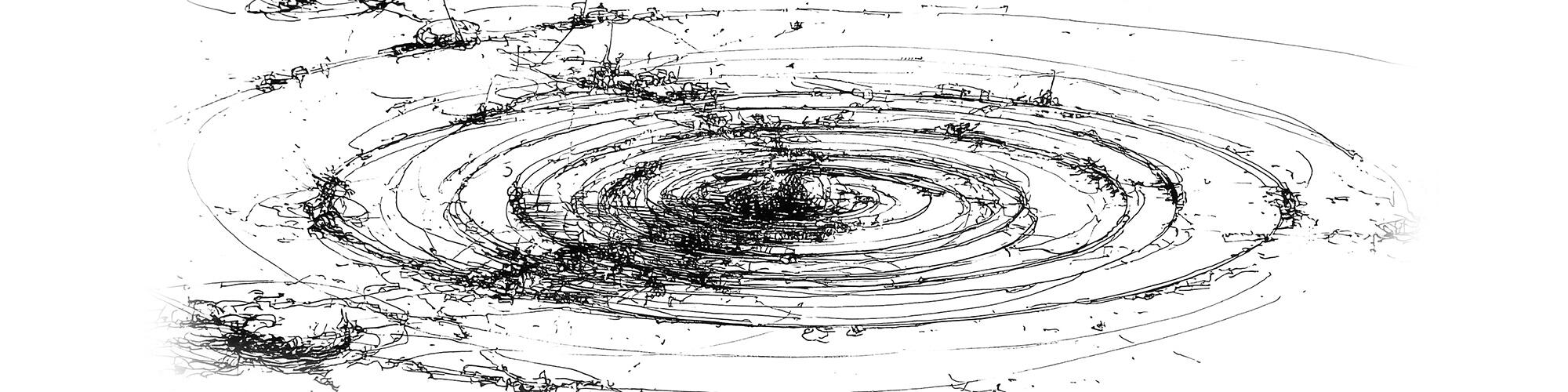

Beautifully Illustrated

Each of the 40 frameworks is anchored by a custom pen-and-ink illustration. Visual metaphors that illuminate complex concepts and create memorable touchpoints for reflection. This isn't decoration - it's designed clarity that helps ideas stick.

Thoroughly Researched

Every framework draws on verified sources across philosophy, psychology, science, culture and more. 500+ sources across 175+ academic disciplines synthesised into clear, accessible insights. Rigorous thinking without jargon or pretension.

Timeless frameworks for navigating what really matters

Kindle £6.99 | Paperback £9.99 | Hardcover £16.99

(Christmas promotional prices)

Also available on Amazon US, CA, AU, DE, FR, ES, IT

Latest writing

Timeless wisdom and frameworks for staying human. Essays on the invisible infrastructure behind AI, attention, identity, meaning and connection.

The Bridge Nobody Built

The Quickening

The Two Attics

The Paths You Actually Walk

The Bridge at Christmas

The Invisible Spectrum of Commerce

The Reins We Dropped

The Silent Factories Behind Our Screens

Identity in the Age of Intelligent Mirrors

Attention: The First Human Discipline

Where this work began?

The Vanishing Places Where We Meet


Why these AI impact calculators exist

AI feels weightless. It isn't.

Technology accelerates faster than our intuition can follow. These calculators slow the world down just enough for the mind to grasp its scale. You type a question, wait a moment and words appear.

There is no engine noise, no heat, no movement. But behind that silence sits an infrastructure closer to heavy industry than software. Vast energy demand. Constant cooling. Thousands of tonnes of physical equipment. And a concentration of resources that rivals nation-states.

Calculators for what really matters

Multiple lenses on the same truth: AI has costs and risks that can't be waved away. Physical. Material. Economic. Systemic. Each reveals a dimension of the invisible infrastructure shaping our future.

AI Energy

The Physics

Calculate the energy, water and carbon footprint of AI - from a single prompt to a billion daily users. Start with your personal impact, then widen the lens to see the impact on a planetary scale.

AI Materials

The Geology

Explore the physical reality behind AI hardware - from refined metals to the ore, rock and tailings that must be excavated. A GPU weighs kilograms; its extraction footprint weighs tonnes.

The England Power Grid

By 2027, AI's energy use could power England for 5+ months a year

If 1 billion people use AI daily as projected, annual energy consumption would reach approximately 280 TWh - enough to power every light, hospital, home, and factory in England (population 56 million) for 150+ days. Nearly half a year.

This isn't hypothetical future demand - it's where the current trajectory leads. The infrastructure powering "ask AI" rivals the energy needs of entire nations.

The Transatlantic Tunnel

Training AI moves earth from London to Chicago

By 2027, the global AI industry could conduct 40-50 major training runs annually. The materials required would move earth equivalent to 130+ Channel Tunnels - enough to dig 6,500 km underwater from London to Chicago.

AI is heavy industry disguised as software. The digital is built from dirt. Every model requires extracting raw material hundreds of times heavier than the final product.

Scale of Power

The Economics

Individual fortunes that rival national GDPs. Corporate treasuries that exceed most countries. When wealth reaches this scale, incentives shape the future more than institutions.

What if it Stopped?

The Backup

What happens when the services millions of businesses depend on... just stop? Not a prediction. It's like checking the exits before the theatre gets crowded.

The Pre-Political Shift

Infrastructure gets built before democratic systems even notice

AI infrastructure can now be built, scaled, and directed before democratic systems engage. Governments regulate after visibility. Capital builds before visibility.

By the time political processes engage - public debate, regulatory frameworks, parliamentary oversight - the infrastructure is already live and costly to unwind. This creates a permanent lag between capability and governance.

Time Beats Government

Even 100% intervention only reduces impact by 66%

When a major LLM provider suddenly fails, moving government intervention from 0% to 100% in a 7-day collapse only reduces job losses from 14 million to 4.6 million. The bottleneck isn't money or authority - it's time.

Government can provide cash and coordination, but cannot recreate months of AI training or rebuild skills workers have forgotten. There is no deposit insurance for "ChatGPT will work."

More key insights from the calculators

Multiple dimensions. One truth.

Why do these calculators stop at 2027?

Because that's the last moment we can still picture the scale.

At 1 billion users, we can say "London's water for a month" and you can picture it. Beyond that, you can't.

AI Energy calculator

The Physics

Every query we make, every interaction with AI, draws power from somewhere. This calculator reveals the energy, water, and carbon footprint of artificial intelligence - from your personal daily usage to planetary scale.

Start with your own habits. Then click 'widen the lens' below to see what a billion daily users means for national grids, water basins, and carbon budgets.

Explore the Calculators


AI materials calculator

The Geology

AI is heavy industry disguised as software. Behind every data centre sits a supply chain that moved mountains - literally.

This calculator shows the physical reality of AI hardware: the rare earth metals, copper, and materials required, and the earth that must be excavated to obtain them.

For every kilogram of refined material, hundreds of kilograms of earth were moved. This is the extraction footprint we never see.


The scale of power calculator

The Economics

When individual wealth reaches nation-state scale, it shapes infrastructure independent of markets, governments or democratic oversight.

This calculator reveals the concentration of wealth and power shaping AI's future - from individual billionaires to corporate treasuries.

Start by exploring individual billionaires, then select 'Billionaire Battery' below to see something remarkable: how long this concentrated wealth could personally fund global AI infrastructure. The answer reframes everything.


AI Failure Simulator

The Backup

What happens when the AI systems millions of businesses depend on suddenly stop working? Not a prediction - a stress test.

Adjust the scenarios and sliders below to explore the impact when large language model providers fail.


Why 2027?

The last intuitive point

At 1 billion daily users (projected 2027), we can still grasp AI's scale through human reference points:

- London's drinking water for a month

- Powering Belgium for two months

- Earth moved equal to 130 Channel Tunnels

Beyond 2027, the numbers escape intuition.

At 5 billion users (early 2030s), comparisons fail. Your mind has no handles for that scale.

The math that matters

AI adoption grows exponentially: 70-100% yearly

Efficiency improvements grow linearly: 15-30% yearly

Efficiency cannot keep pace with adoption.

The 2030s will be the decade when invisible costs surface as visible constraints in energy grids, water basins, and mineral supply chains.
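The gap between the two rates can be made concrete with a rough compounding sketch. It assumes, as a simplification the text does not state, that total demand each year is adoption growth multiplied by the energy each use still needs after the efficiency gain. The function name is illustrative.

```python
def net_growth_factor(adoption_growth: float, efficiency_gain: float) -> float:
    """Yearly multiplier on total resource use.

    Assumption: usage grows by `adoption_growth` (0.70 means 70%) while
    each use needs `efficiency_gain` less energy (0.30 means 30% less).
    """
    return (1 + adoption_growth) * (1 - efficiency_gain)


# Ends of the ranges quoted above.
best_case = net_growth_factor(0.70, 0.30)   # slowest adoption, fastest efficiency
worst_case = net_growth_factor(1.00, 0.15)  # fastest adoption, slowest efficiency

for label, factor in [("best case", best_case), ("worst case", worst_case)]:
    print(f"{label}: x{factor:.2f} per year, x{factor ** 3:.2f} after 3 years")
```

Under this reading, even the most favourable pairing leaves total demand growing by about 19% a year.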

Why this matters

2027 isn't the endpoint. It's the inflection point.

The last moment when we can still see clearly enough to make informed choices.

Not whether to use AI. But how to use it within planetary boundaries.

Not whether to grow. But how to grow sustainably.

Beyond 2027, we're navigating by instruments, not intuition.

We're managing systems too large to feel, making trade-offs too abstract to grasp instinctively.

This is why these calculators exist. To show us the scale while we can still see it.


KEY INSIGHTS FROM THE CALCULATORS

12 insights across 4 dimensions

AI has costs and risks that can't be waved away. Physical. Material. Economic. Systemic. Each calculator reveals a different dimension of the invisible infrastructure shaping our future.

The Physics

AI feels weightless. It isn't. Every query, every training run, every model requires massive energy and water consumption at industrial scale.

The England Power Grid

By 2027, AI's energy use could power England for 5+ months

If 1 billion people use AI daily as projected, annual energy consumption would reach approximately 280 TWh - enough to power every light, hospital, home, and factory in England (population 56 million) for 150+ days. Nearly half a year.

This isn't hypothetical future demand - it's where the current trajectory leads. Every conversation with ChatGPT, every AI-generated email, every code completion adds to this total. The infrastructure powering "ask AI" rivals the energy needs of entire nations.

We think of AI as digital and weightless. The reality is industrial-scale energy consumption happening in the background of every query. The question now is whether we're prepared for energy demand at nation-state scale from a technology most people consider free and unlimited.
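For readers who want to check the comparison, here is a small sketch that uses only the figures quoted in this insight. The derived values are implied by those figures and are not independent data.

```python
ANNUAL_AI_ENERGY_TWH = 280      # projected annual AI consumption (from the text)
DAYS_OF_ENGLAND_POWERED = 150   # "150+ days" (from the text)
DAILY_USERS = 1_000_000_000     # 1 billion daily users (from the text)

# England's daily energy use as implied by the two figures above.
england_twh_per_day = ANNUAL_AI_ENERGY_TWH / DAYS_OF_ENGLAND_POWERED

# Average energy per user per day (1 TWh = 1e9 kWh).
kwh_per_user_per_day = ANNUAL_AI_ENERGY_TWH * 1e9 / (DAILY_USERS * 365)

# Share of a year that 150 days represents.
share_of_year = DAYS_OF_ENGLAND_POWERED / 365

print(f"{england_twh_per_day:.2f} TWh per day implied for England")
print(f"{kwh_per_user_per_day:.2f} kWh per user per day")
print(f"{share_of_year:.0%} of a year")
```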

The Water Cost

52 Olympic pools daily, or London's supply for 37 days

Global AI at 2027 scale requires filling 19,200 Olympic swimming pools annually with fresh water, then evaporating it as waste heat. That's 52 pools per day, every day, year-round. If 1 billion people use AI daily by 2027, the water needed for cooling would supply London for 37 days annually.

This water isn't recycled - it's consumed. Every billion litres diverted to data centres is a billion litres not available for homes, farms, or ecosystems. Data centres locate where water is abundant and cheap - often in regions already facing water stress. The challenge isn't just volume - it's permanence. Traditional infrastructure has peak demand. AI infrastructure creates sustained demand that grows continuously. Water isn't infinite and AI's thirst is accelerating.
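The pool arithmetic can be reproduced in a few lines. The pool volume is an assumption (a nominal 50 m x 25 m x 2 m pool holding 2.5 million litres); the other figures come from the text above.

```python
POOLS_PER_YEAR = 19_200        # from the text
LONDON_DAYS = 37               # from the text
LITRES_PER_POOL = 2_500_000    # assumption: nominal 50 m x 25 m x 2 m pool

pools_per_day = POOLS_PER_YEAR / 365
litres_per_year = POOLS_PER_YEAR * LITRES_PER_POOL

# London's daily supply as implied by the 37-day comparison.
implied_london_litres_per_day = litres_per_year / LONDON_DAYS

print(f"{pools_per_day:.1f} pools per day")
print(f"{litres_per_year / 1e9:.0f} billion litres per year")
print(f"{implied_london_litres_per_day / 1e9:.1f} billion litres per day implied for London")
```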

The Efficiency Paradox

More efficient chips don't reduce consumption - they enable bigger models

Each generation of AI chips is more energy-efficient than the last. Yet total AI energy consumption keeps rising. The efficiency gains get consumed by scale increases, not energy reductions.

GPT-4 is more efficient than GPT-3. But it's also substantially larger. The efficiency improvement enabled the scale increase rather than reducing total power draw. When chips become twice as efficient, the industry doesn't build half as many - it builds twice as many doing four times the work.

More efficient chips make AI cheaper to run, which makes it viable for more applications, which increases total deployment. It's the total operations that matter and that number keeps growing.
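The "twice as many doing four times the work" claim can be sketched as a toy model. Every number here is hypothetical, and the model assumes power draw per chip stays constant between generations.

```python
from dataclasses import dataclass


@dataclass
class Fleet:
    chips: int
    watts_per_chip: float   # power draw per chip (hypothetical)
    work_per_watt: float    # efficiency: useful work per watt (hypothetical)

    @property
    def power_draw(self) -> float:
        return self.chips * self.watts_per_chip

    @property
    def work_done(self) -> float:
        return self.power_draw * self.work_per_watt


before = Fleet(chips=1_000, watts_per_chip=500, work_per_watt=1.0)
# Next generation: chips are twice as efficient, and twice as many are built.
after = Fleet(chips=2_000, watts_per_chip=500, work_per_watt=2.0)

work_ratio = after.work_done / before.work_done      # 4.0: four times the work
power_ratio = after.power_draw / before.power_draw   # 2.0: total power still doubles

print(f"work x{work_ratio:.0f}, power x{power_ratio:.0f}")
```

In this toy case the efficiency gain shows up entirely as more work, while total power draw doubles.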

The Geology

Digital technology sits on mined rock. AI's material foundation requires extracting rare earth metals from specific places, moving tonnages of earth, and creating supply chain dependencies that span continents.

The Transatlantic Tunnel

Training AI moves enough earth to dig from London to Chicago

By 2027, the global AI industry could conduct 40-50 major training runs annually. The rare earth metals and materials required would move earth equivalent to 130+ Channel Tunnels - enough to dig 6,500 km underwater from London to Chicago.

AI is heavy industry disguised as software. The digital is built from dirt. Every model, every chip, every data centre requires extracting raw material hundreds of times heavier than the final product.

This isn't abstract environmental impact - it's geological-scale extraction creating permanent landscape changes. The mountains moved to produce AI infrastructure won't return. The ecosystems disrupted don't recover. The digital revolution leaves physical scars that last for generations.
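A quick check of the tunnel comparison, as a sketch. The Channel Tunnel length of about 50.45 km is not taken from the text; the tunnel count and training-run range are.

```python
CHANNEL_TUNNEL_KM = 50.45    # approximate tunnel length (not from the text)
TUNNEL_EQUIVALENTS = 130     # "130+ Channel Tunnels" (from the text)
TRAINING_RUNS = (40, 50)     # "40-50 major training runs annually" (from the text)

# End-to-end length of 130 tunnels, to compare with the 6,500 km quoted.
total_km = CHANNEL_TUNNEL_KM * TUNNEL_EQUIVALENTS

# Tunnel-equivalents of earth per training run, implied by the figures above.
tunnels_per_run = [TUNNEL_EQUIVALENTS / runs for runs in TRAINING_RUNS]

print(f"{total_km:.0f} km end to end")
print(f"{tunnels_per_run[1]:.2f} to {tunnels_per_run[0]:.2f} tunnel-equivalents per run")
```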

The 350 Times Multiplier

Every kilogram of AI hardware requires up to 350 kg of earth moved

For every kilogram of rare earth metals in a data centre, 150-350 kilograms of earth were excavated. Building AI infrastructure requires extracting raw material hundreds of times heavier than the final product. The digital is built from dirt.

This isn't just mining - it's geological-scale extraction. The specialist chips, the servers, the infrastructure that makes AI possible all require moving mountains of earth to produce grams of refined materials.

The multiplier reveals a fundamental truth: material efficiency hasn't eliminated material intensity - it's hidden it. Each chip contains less material than previous generations. But each chip also requires more sophisticated materials, more precise extraction, and more complex refining.
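The multiplier is simple to apply. A minimal sketch, using the 150-350 range quoted above; the function name and the one-tonne example are illustrative.

```python
def earth_moved_kg(refined_kg: float, low: float = 150, high: float = 350) -> tuple[float, float]:
    """Range of earth excavated (kg) for a given mass of refined material (kg)."""
    return refined_kg * low, refined_kg * high


# Example: one tonne of refined material.
low_kg, high_kg = earth_moved_kg(1_000)
print(f"{low_kg / 1000:.0f} to {high_kg / 1000:.0f} tonnes of earth moved")
```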

Three Country Choke Point

China, Congo, Chile control the materials that make AI possible

Three countries control AI's material foundation: China (70% of rare earths), Democratic Republic of Congo (70% of cobalt), Chile (major copper). The supply chain is a single point of failure.

This isn't distributed global trade - it's concentrated geological reality. The materials that make AI possible only exist in specific places. Political decisions in three capitals can determine AI's future as much as innovation in Silicon Valley.

The concentration creates asymmetric power. Nations with rare earth deposits can shape AI development through supply decisions. Markets can optimise distribution but cannot create new deposits. Geology determines who controls AI's material supply chain and doesn't care about strategic plans or market demand.

The Economics

When individual wealth reaches nation-state scale, it shapes infrastructure independent of markets, governments, or democratic oversight. AI's future is shaped by who controls the capital.

AI Power is Increasingly Pre-Political

Infrastructure gets built before democratic systems even notice

AI infrastructure can now be built, scaled, and directed before democratic systems engage. This isn't about secrecy - it's about timing asymmetry. Governments regulate after visibility. Capital builds before visibility.

By the time political processes engage - public debate, regulatory frameworks, parliamentary oversight - the infrastructure is already live, entrenched, and costly to unwind. This creates a permanent lag between capability and governance.

The lag isn't fixable through faster regulation. Democratic processes move deliberatively by design - consultation, debate, revision, implementation. Capital moves instantly by design - decision, deployment, iteration. The speed gap is structural, not bureaucratic.

Wealth Buys Speed, Not Wisdom

The ability to fund AI quickly is not the same as the ability to guide it well

The Power Calculator shows who can fund AI infrastructure and for how long. What it can't show is whether speed of deployment equals quality of direction.

Wealth enables acceleration. It doesn't guarantee better incentive structures, clearer thinking about risks, or wisdom about unintended consequences. Speed amplifies whatever is already there: existing assumptions, existing blind spots, existing priorities.

AI development is happening at unprecedented velocity. Models that took years to train can now be deployed in months. The faster you can move, the less time exists for reflection, testing, or course correction. Acceleration without reflection is a form of risk in itself. The question isn't just "Can we build this fast?" but "Should we build this fast?"

Power Here is Systemic, Not Personal

Even if the individuals changed, the structure would stay the same

The concentration the calculator reveals emerged from economics, not conspiracy. AI requires massive capital. Technical advantages compound. Markets reward scale above everything else. These forces favour consolidation regardless of who's involved.

Replace every current AI leader tomorrow and similar patterns would likely re-emerge. The incentives are structural: compute costs billions, the best models attract the most users and network effects make dominance self-reinforcing.

Fixes aimed only at people miss what's producing the outcome. The question isn't who controls AI - it's whether a system with these built-in incentives can produce different results. Understanding the structure matters more than assigning blame.

The Backup

The calculators above reveal scale. This section reveals fragility - what happens when systems this deeply embedded have no backup plan.

Time Beats Government

Even perfect political will cannot outrun collapse when failure is fast

When a major LLM provider suddenly fails, moving government intervention from 0% to 100% in a 7-day collapse only reduces job losses from 14 million to 4.6 million. The bottleneck isn't money or authority - it's time.

Government can provide cash, confidence and coordination. But it cannot recreate the training runs that build these AI systems, rebuild the technical connections companies depend on or restore the skills workers have forgotten.

Training GPT-4 took months and hundreds of millions of pounds. Even with unlimited funding, specialist chips are scarce and training runs take months. In 2008, governments guaranteed bank deposits and banks stabilised. That was a liquidity crisis. LLM failure is a technical capacity crisis - and there is no deposit insurance for "ChatGPT will work."
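The size of the effect follows from the two job-loss figures quoted above. This sketch uses the rounded figures from the text, so the result is approximate.

```python
jobs_lost_no_intervention = 14.0e6    # 0% intervention (from the text)
jobs_lost_full_intervention = 4.6e6   # 100% intervention (from the text)

jobs_saved = jobs_lost_no_intervention - jobs_lost_full_intervention
reduction = jobs_saved / jobs_lost_no_intervention
remaining = jobs_lost_full_intervention / jobs_lost_no_intervention

print(f"Jobs saved: {jobs_saved / 1e6:.1f} million")
print(f"Reduction: {reduction:.0%}, still lost: {remaining:.0%}")
```

With these rounded inputs, full intervention removes about two-thirds of the losses and leaves roughly a third in place.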

Concentration Is the Real Risk

Systemic exposure explodes when multiple frontier models fail together.

The simulator shows that the single biggest driver of damage isn't speed, government response, or scale - it's concentration.

When only one major provider fails, competitors partially absorb demand and losses are capped. But when the top frontier models fail together, there is no spare capacity to migrate to.

In the multiple-frontier scenario, affected companies jump from hundreds of thousands to millions, economic impact increases several-fold, and recovery stretches dramatically - even with strong intervention.

This isn't a failure of response. It's a failure of architecture. A system built around a few irreplaceable providers has no shock absorbers when they fall together.

Recovery Has a Floor

Some damage can be reduced. Recovery time cannot.

The simulator shows that while early warning and government support reduce immediate losses, they do not compress recovery proportionally.

Even in the most optimistic scenarios - long notice periods and strong intervention - recovery still takes months, not weeks. This is because rebuilding AI capacity depends on fixed constraints: scarce chips, training time and reconstituting complex technical systems.

You can slow collapse. You can cushion impact. But once capacity is gone, time becomes the limiting factor.

This is not a financial crisis that can be stabilised with liquidity. It is a capacity shock, and capacity recovers at human and physical speeds.

Now try the calculators yourself


Frequently Asked Questions

Common questions about AI, the calculators and what these numbers mean.

Understanding AI

What is an LLM?

Large Language Model - AI systems like ChatGPT, Claude or Gemini that generate human-like text. Unlike traditional software that follows rules, LLMs recognize patterns across billions of examples to predict helpful responses.

What is a prompt?

Every message you send to an AI that produces a response. A 10-message conversation = 10 prompts, each requiring energy to generate.

What's the difference between a search query and an LLM prompt?

Search: Retrieves pre-indexed results. Like looking up an address in a phone book. Energy: ~0.0003 kWh.

LLM: Generates new text from scratch. Like writing a personalised letter based on everything you've read. Energy: ~0.002-0.005 kWh.

One LLM prompt uses 10-100× more energy than a search query. The question isn't "is AI bad?" - it's "are we using the right tool for the task?"
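The per-query estimates above can be compared directly. This sketch uses only those point estimates; with them the ratio works out to roughly 7-17×, toward the lower end of the 10-100× range, which is the source's own figure and is not derived here.

```python
SEARCH_KWH = 0.0003               # per search query (from the text)
LLM_KWH_RANGE = (0.002, 0.005)    # per LLM prompt (from the text)
PROMPTS_PER_CONVERSATION = 10     # the 10-message example (from the text)

ratio_low = LLM_KWH_RANGE[0] / SEARCH_KWH
ratio_high = LLM_KWH_RANGE[1] / SEARCH_KWH

# Energy for one 10-prompt conversation, low and high estimates.
conversation_kwh = tuple(kwh * PROMPTS_PER_CONVERSATION for kwh in LLM_KWH_RANGE)

print(f"One prompt is about {ratio_low:.1f}x to {ratio_high:.1f}x one search")
print(f"A 10-prompt conversation: {conversation_kwh[0]:.2f} to {conversation_kwh[1]:.2f} kWh")
```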

What's the difference between training and inference?

Training: Teaching the model (one-time, massive cost). Happens once when built, then amortised across billions of uses.

Inference: Using the trained model (ongoing cost). Happens every time someone asks a question. Scales with usage.

Our calculators focus on inference - the continuous, growing footprint as AI embeds into daily life.

Why AI Uses Resources

Why does AI use so much energy?

Three reasons:

(1) Thousands of GPUs consuming 300-700 watts each,

(2) Inference happens billions of times daily,

(3) Scale compounds - individual use is small, but × billions of users × continuous operation = infrastructure-scale.

Why does AI need water?

Data centres generate intense heat. Water cools them through evaporation (~2 litres per kWh). Additionally, electricity generation requires water for thermal power plants. Every litre for AI is a litre not available for crops, drinking, or ecosystems.
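To see how the per-GPU wattage and the cooling figure combine, here is a rough sketch. The cluster size of 10,000 GPUs is hypothetical; the wattage range and the ~2 litres per kWh come from the answers above. It counts GPU draw only and ignores other data centre overheads.

```python
GPU_COUNT = 10_000             # hypothetical cluster size
WATTS_PER_GPU = (300, 700)     # range quoted above
LITRES_PER_KWH = 2             # evaporative cooling estimate quoted above


def daily_footprint(watts_per_gpu: float) -> tuple[float, float]:
    """Return (kWh per day, litres of cooling water per day) for the cluster."""
    kwh_per_day = GPU_COUNT * watts_per_gpu * 24 / 1000
    litres_per_day = kwh_per_day * LITRES_PER_KWH
    return kwh_per_day, litres_per_day


for watts in WATTS_PER_GPU:
    kwh, litres = daily_footprint(watts)
    print(f"{watts} W per GPU: {kwh:,.0f} kWh and {litres:,.0f} litres per day")
```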

What are rare earth metals and why does AI need them?

17 metallic elements essential for GPUs and data centre infrastructure. For every 1 kg of refined rare earth, 150-350 kg of earth must be excavated. ~70% of production is concentrated in China. The digital is built from dirt.

About the Calculators

Why do the calculators stop at 2027?

2027 is the last moment where human intuition can grasp AI's planetary scale. At 1 billion users, we can picture "London's water for a month." Beyond that, comparisons fail.

How accurate are these calculations?

Conservative and transparent - not worst-case, not best-case. We use peer-reviewed research, government data (UK DESNZ, IEA, USGS) and mid-range estimates. Full methodology in each calculator's Assumptions section. All calculations performed client-side - inspect the code yourself.

Are these worst-case scenarios?

No - they're representative of current conditions. We don't include training energy, embodied carbon, or network transmission. We don't assume universal renewable energy or maximum-intensity models. We aim for "what's actually happening at scale right now."

Current reality: Adoption growing 70-100% yearly. Efficiency improving 15-30% yearly. Efficiency isn't keeping pace.

Can I trust the data sources?

Yes. Primary sources: UK DESNZ (government energy data), IEA (global energy authority), USGS (minerals data), Forbes (verified wealth data), peer-reviewed academic research. All sources cited in calculator Assumptions sections.

Taking Action

Should I stop using AI?

No - but use it intentionally. The question is: "Is this the right tool?"

Use AI when you need synthesis, analysis or creative generation. Use search when you need quick facts (10-100× more efficient). It's about calibration, not elimination.

What can individuals do?

(1) Use the right tool (search when search works)

(2) One comprehensive prompt beats dozens of fragments

(3) Choose smaller models when sufficient (2-3× less energy)

(4) Support transparency (ask companies about their footprint)

Individual choices compound. But the biggest impact: advocacy for policy that makes AI infrastructure visible and accountable.

What should policymakers consider?

Three areas:

(1) Transparency - require public reporting of energy, water, material footprints.

(2) Infrastructure planning - assess data centre impacts on grids, water basins, supply chains before approval.

(3) Resource allocation - incentivize efficiency, ensure growth happens within planetary boundaries.

These calculators exist to make the invisible visible. Policy follows visibility.


Ten Things I Learned About Building Things That Last

Principles for resilient systems in the age of AI

We built remarkable systems.

Over time, we optimised them to be faster, cheaper and more efficient. We removed backup plans because they looked wasteful. We automated skills we once practiced ourselves.

None of this was reckless. Each step made sense in isolation.

But taken together, these choices produced systems that work beautifully - until they don't.

And when they fail, we often discover we no longer know how to repair them.

These ten principles come from studying what actually happens when complex systems break. They are not rules or demands. They are patterns - observations about what tends to endure and what often fails.

They are offered quietly, for anyone building something meant to last.

PRINCIPLE 1

Use the Right-Sized Tool

Don't use a rocket ship to go to the shops.

Most work does not require the most powerful, complex or expensive solution available. It requires tools that are dependable, understandable and proportionate to the task.

When we default to oversized tools, we waste resources and create fragile dependencies. When those tools fail, even simple work stops - despite never needing that level of capability in the first place.

In the age of AI

Many people now use the most powerful AI available to draft emails, fix spelling or answer routine questions. When those services slow down, change pricing or go offline, even simple tasks stop.

Everyday tasks don't need extraordinary intelligence. They need dependable help.

PRINCIPLE 2

Keep a Spare Tire

Important things need backup capacity.

Systems that run at full capacity leave no room for surprise. Hospitals with no empty beds, supply chains with no inventory and organisations with no redundancy cannot absorb shocks.

Slack is not inefficiency. It's a buffer that allows systems to adapt when reality diverges from the plan.

In the age of AI

Many organisations build workflows that assume constant availability of AI services. When usage spikes or providers limit access, there is no fallback - work queues up or stops entirely.

The absence of slack turns strain into collapse.

PRINCIPLE 3

Avoid Lock-In

Make sure you can switch if a supplier disappears.

If your systems only work with one vendor's technology, you are not in a partnership - you are dependent. When terms change or services fail, that dependence becomes a point of failure.

The ability to switch without breaking everything is the difference between inconvenience and collapse.

In the age of AI

Teams often build processes tightly around a single AI provider's tools and formats. When prices rise or access changes, switching becomes slow, expensive or impossible.

What felt like convenience reveals itself as constraint.
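One way to keep switching possible is to let everyday code depend on a small interface rather than on a vendor's own tools. The sketch below is only an illustration under stated assumptions: `TextProvider`, `VendorA`, `VendorB` and `draft_reply` are hypothetical names, and the vendor classes are stand-ins that return labelled text instead of calling any real service.

```python
from typing import Protocol


class TextProvider(Protocol):
    """Hypothetical interface: anything that can complete a prompt."""

    def complete(self, prompt: str) -> str: ...


class VendorA:
    # Stand-in for one supplier (hypothetical; no real service is called).
    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorB:
    # Stand-in for an alternative supplier with the same shape.
    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


def draft_reply(provider: TextProvider, message: str) -> str:
    # The workflow knows only the interface, so the supplier can be swapped
    # by passing a different object, without rewriting the workflow itself.
    return provider.complete(f"Reply politely to: {message}")
```

Swapping `VendorA()` for `VendorB()` changes one argument rather than every process built on top of it.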

PRINCIPLE 4

Keep Human Skills Alive

Don't let automation erase the ability to do the work.

Automation increases capacity, but it also weakens practiced skill. When tools handle tasks for long enough, people forget how to perform them independently.

Technology can disappear overnight. Skills take months or years to rebuild.

In the age of AI

As writing, analysis and coding are increasingly automated, people rely less on their own judgment. When tools are unavailable, work does not merely slow - it stalls.

The loss is not technological. It is human.

PRINCIPLE 5

Let Systems Fail Gradually

Things should get worse before they break.

Binary systems only have two states: working perfectly or completely broken. Resilient systems have intermediate states - slower performance, reduced features or core-only operation.

Gradual failure provides warning, choice and time to respond.

In the age of AI

Some AI services queue requests when overwhelmed rather than rejecting them entirely.

Others offer 'reduced quality' modes that keep working when full capacity fails.

But many simply stop - no warning, no degraded service, just absence.

Designing for partial function preserves dignity and control under stress.
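The intermediate states described above can be sketched as an ordered list of service tiers, tried one after another. This is a minimal illustration, not a description of how any particular AI service behaves: `answer_with_degradation`, `full_service` and `reduced_service` are hypothetical names, and the overload is simulated.

```python
from typing import Callable, List, Tuple

Handler = Callable[[str], str]


def answer_with_degradation(
    request: str, tiers: List[Tuple[str, Handler]]
) -> Tuple[str, str]:
    """Try each tier in order; return (tier_name, result) from the first that works."""
    for name, handler in tiers:
        try:
            return name, handler(request)
        except Exception:
            continue  # this tier is unavailable; fall through to the next one
    # Every tier failed: say so explicitly instead of simply going silent.
    return "unavailable", "Service is temporarily unavailable."


def full_service(request: str) -> str:
    # Simulated overload of the full-capacity tier (assumption for the example).
    raise TimeoutError("simulated overload")


def reduced_service(request: str) -> str:
    # A simpler mode that still does the core job.
    return f"short answer to: {request}"
```

Returning the tier name alongside the result lets the caller tell people they are getting reduced service, which is the warning that gradual failure is meant to provide.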

PRINCIPLE 6

Keep Some Things Local

Not everything should depend on the internet.

Essential functions that require constant connectivity or remote approval fail when those connections disappear.

Local and offline capability is not a luxury. It is what allows systems to keep working when networks fail, services shut down or providers vanish.

In the age of AI

Many everyday tools now require remote servers to operate at all. When connectivity drops, tools that worked yesterday stop working entirely.

Local capability becomes visible only when it is gone.

PRINCIPLE 7

Make Dependencies Visible

Know what would break if something you use went away.

Dependencies often remain invisible until failure exposes them. What looks like a single tool may rely on layers of platforms, services and assumptions beneath it.

Hidden dependencies are unmanaged risks.

In the age of AI

Organisations often believe they use several AI services, only to discover those services rely on the same underlying provider.

What appeared diversified fails all at once.
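A simple inventory can surface this kind of hidden overlap. The sketch below assumes you can write down, even roughly, what each tool relies on. The tool and provider names in `inventory` are invented for illustration, and `shared_upstreams` is a hypothetical helper that follows each tool's chain of dependencies and reports anything reached by more than one tool.

```python
from collections import defaultdict
from typing import Dict, List, Set


def shared_upstreams(
    depends_on: Dict[str, List[str]], tools: List[str]
) -> Dict[str, Set[str]]:
    """Return upstream dependencies that more than one of the given tools relies on."""
    reached_by: Dict[str, Set[str]] = defaultdict(set)
    for tool in tools:
        stack = list(depends_on.get(tool, []))
        seen: Set[str] = set()
        while stack:
            dep = stack.pop()
            if dep in seen:
                continue
            seen.add(dep)
            reached_by[dep].add(tool)
            # Follow indirect dependencies as well as direct ones.
            stack.extend(depends_on.get(dep, []))
    return {dep: users for dep, users in reached_by.items() if len(users) > 1}


# Hypothetical inventory: two tools look independent but share one host.
inventory = {
    "writing-assistant": ["model-host-x"],
    "support-chatbot": ["chat-platform"],
    "chat-platform": ["model-host-x"],
    "spell-checker": [],
}

overlap = shared_upstreams(
    inventory, ["writing-assistant", "support-chatbot", "spell-checker"]
)
```

Here `overlap` names `model-host-x` as a dependency of both the writing assistant and the support chatbot, even though the chatbot only reaches it indirectly.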

PRINCIPLE 8

Don't Concentrate What Can't Fail Together

Spread critical dependencies.

Concentration reduces cost and complexity - until it synchronises failure. When everyone relies on the same components, everyone fails at once.

Diversity prevents common-mode collapse.

In the age of AI

As more sectors rely on the same few AI platforms, outages or failures ripple across industries simultaneously.

Efficiency becomes shared vulnerability.

PRINCIPLE 9

Keep Intelligence Close to Its Use

Don't send simple problems to distant systems.

Routine decisions and basic reasoning work best near where they are needed. Sending every task to centralised systems adds delay, dependency and fragility.

Distributed capability reduces risk while preserving function.

In the age of AI

Many simple decisions are routed to remote AI services despite being predictable and local. A spell-checker that runs on your device is more resilient than one in the cloud. A chatbot that answers from local knowledge is faster than one querying distant servers.

When those remote services are slow or unavailable, everyday processes stall. Keeping intelligence close restores autonomy.

PRINCIPLE 10

Plan for Recovery, Not Just Prevention

Accept that failure will happen and prepare to rebuild.

No system can prevent every failure. Services end. Providers disappear. Technology breaks.

Recovery is constrained by physical limits, human capacity and time.

In the age of AI

Many organisations invest heavily in preventing AI failures, but little in rehearsing how work continues without them.

When disruption arrives, the shock is not that systems failed - it's that no one planned for what comes next.

Staying human does not mean rejecting technology.

It means building systems that respect limits, preserve agency and remain workable beyond the moment they were optimised for.

These principles will not prevent every failure.

They make survival - and recovery - possible when failure arrives.

Why this exists

Most tools today help you decide faster, optimise better or feel reassured. This one doesn't.

Instead of offering answers, it helps you examine what you care about through four different ways of seeing - cultural, scientific, philosophical and existential. Each reveals something different.

Shaped by the ideas in The Human Thread, it won't resolve, advise or validate.

It simply helps you see more clearly what kind of thing you're dealing with.

FAQ: Why not just ask an AI directly?

You can - and most AI systems will try to help by explaining, reassuring or offering options. This instrument is designed for a different purpose. It deliberately refuses to optimise or resolve.

Instead of answering your question, it examines what you've named through four distinct lenses, then synthesises what emerges. The value isn't in being told what to do - it's in seeing your question differently.

It's not smarter than a general AI. It's more disciplined. For some questions, that discipline matters.

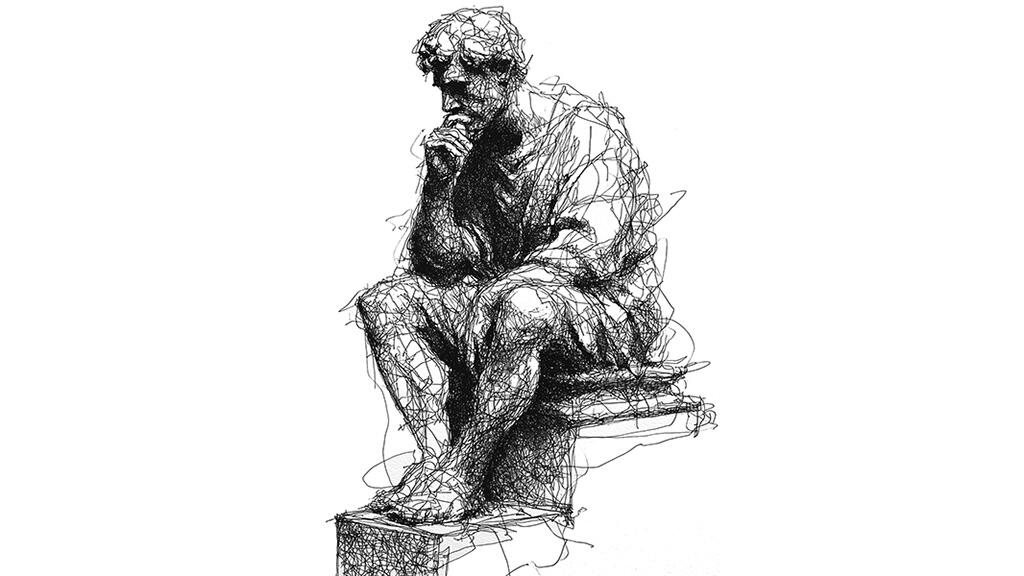

FAQ: Can I use this on my phone or tablet?

Yes. Visit the instrument in your mobile browser, then:

iPhone/iPad: Tap the Share button, then "Add to Home Screen"

Android: Tap the menu (three dots), then "Add to Home Screen" or "Install App"

It will appear as an app with The Thinker icon. No app store needed.